import gym

import matplotlib

import numpy as np

import sys

from collections import defaultdict

import pprint as pp

from matplotlib import pyplot as plt

%matplotlib inline

import itertools

from matplotlib import cm, colors

1. Introduction

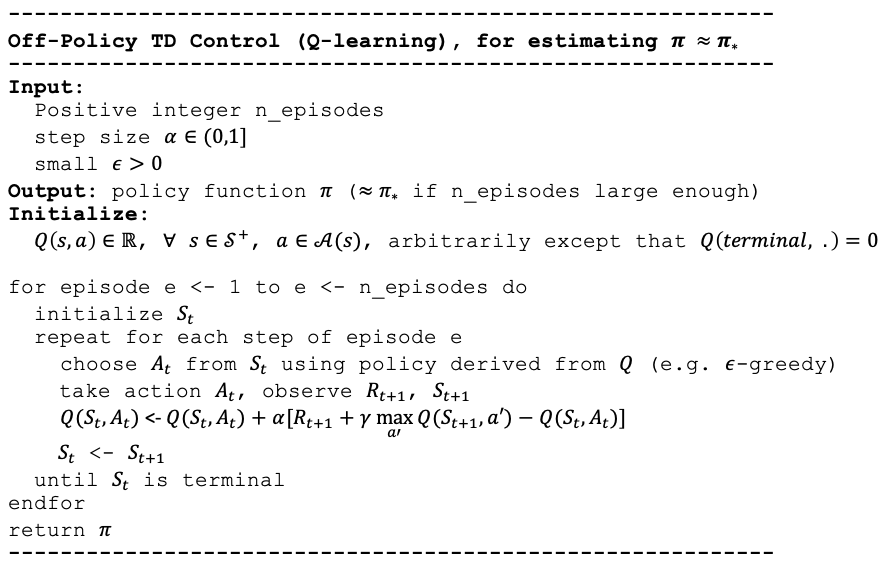

In a Markov Decision Process (Figure 1), the agent and the environment interact continuously.

More details are available in Reinforcement Learning: An Introduction by Sutton and Barto.

The dynamics of the MDP are given by

$$p(s', r \mid s, a) = \Pr\{S_t = s', R_t = r \mid S_{t-1} = s, A_{t-1} = a\}$$

The policy of an agent is a mapping from the current state of the environment to the action that the agent takes in that state. Formally, a policy is given by

$$\pi(a \mid s) = \Pr\{A_t = a \mid S_t = s\}$$

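The discounted return is given by

$$G_t = R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \cdots = \sum_{k=0}^{\infty} \gamma^k R_{t+k+1}$$

where $\gamma \in [0, 1]$ is the discount rate.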

Most reinforcement learning algorithms involve the estimation of value functions - in our present case, the state-value function. The state-value function maps each state to a measure of “how good it is to be in that state” in terms of expected rewards. Formally, the state-value function under policy $\pi$ is given by

$$v_\pi(s) = \mathbb{E}_\pi\left[G_t \mid S_t = s\right]$$

The Q-learning algorithm discussed in this post numerically estimates the action-value function, from which the state-value function is then derived.

2. Environment

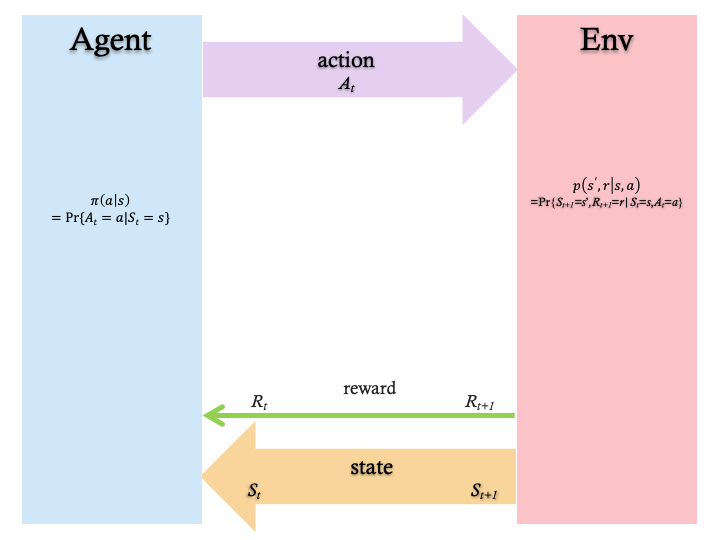

Figure 2 shows the environment we will use in this series, the Windy Gridworld.

Each episode starts in the start state, S, and the agent tries to reach the goal state, G, in as few steps as possible. The agent can apply four movements, or actions, to the environment:

- up (0)

- right (1)

- down (2)

- left (3)

There is a crosswind running upward through the middle of the grid. The strength of the crosswind is indicated in the center columns of the grid. This strength is added to the vertical displacement of a movement, based on the strength indicated for the departing state. For example, if you are one cell to the right of the goal, the action left will take you to the cell just above the goal.

Tasks are episodic and undiscounted. All rewards are -1 until the goal is reached.
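To make the undiscounted, -1-per-step reward structure concrete, here is a minimal sketch that computes the return of an episode from its list of rewards. The function name `discounted_return` and the sample episodes are illustrative assumptions, not part of the environment code.

```python
def discounted_return(rewards, gamma=1.0):
    """Return sum_k gamma**k * rewards[k], accumulated from the last reward backward."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Hypothetical episode: the goal is reached after 15 steps, each step rewarded with -1.
episode_rewards = [-1] * 15

# With gamma = 1 (undiscounted), the return is minus the number of steps taken.
print(discounted_return(episode_rewards, gamma=1.0))  # -15.0

# With gamma < 1, later rewards count less, so the return is less negative.
print(discounted_return(episode_rewards, gamma=0.9))
```

Because every step costs -1 and there is no discounting, maximizing the return is the same as reaching G in as few steps as possible.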

States are numbered from 0 to 69 in this 7 by 10 grid, in a row-wise fashion starting in the top-left corner.
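The state numbering and the wind rule can be sketched in a few lines. This is not the code of `WindyGridworldEnv`; it is a simplified model under stated assumptions: the wind strengths per column are taken to be `[0, 0, 0, 1, 1, 1, 2, 2, 1, 0]` (consistent with the orange and red columns in the policy plots later in this post), and the move and the wind are applied together before the result is clipped to the grid. The names `WIND`, `MOVES` and `windy_step` are hypothetical.

```python
import numpy as np

GRID_SHAPE = (7, 10)                     # 7 rows by 10 columns, states 0..69
WIND = [0, 0, 0, 1, 1, 1, 2, 2, 1, 0]    # assumed upward wind strength per column
MOVES = {0: (-1, 0),                     # up
         1: (0, 1),                      # right
         2: (1, 0),                      # down
         3: (0, -1)}                     # left


def state_to_position(state):
    """Convert a row-wise state number into a (row, col) position."""
    row, col = np.unravel_index(state, GRID_SHAPE)
    return int(row), int(col)


def windy_step(state, action):
    """Return the next state number after taking `action` in `state`."""
    row, col = state_to_position(state)
    d_row, d_col = MOVES[action]
    # The wind of the departing column pushes the agent upward (toward row 0).
    new_row = min(max(row + d_row - WIND[col], 0), GRID_SHAPE[0] - 1)
    new_col = min(max(col + d_col, 0), GRID_SHAPE[1] - 1)
    return int(np.ravel_multi_index((new_row, new_col), GRID_SHAPE))


print(state_to_position(37))  # (3, 7), the goal cell
print(windy_step(38, 3))      # 27, i.e. cell (2, 7), just above the goal
```

The last line reproduces the example in the text: starting one cell to the right of the goal (state 38) and moving left ends in the cell just above the goal (state 27).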

The environment is implemented using the OpenAI Gym library.

3. Agent

The agent is the decision maker. It needs to provide instructions to reach the goal in as few steps as possible while compensating for the effect of the wind. After observing the state of the environment, expressed as a number from 0 to 69 that reflects the current position in the grid, the agent can take one of the following actions:

- up (0)

- right (1)

- down (2)

- left (3)

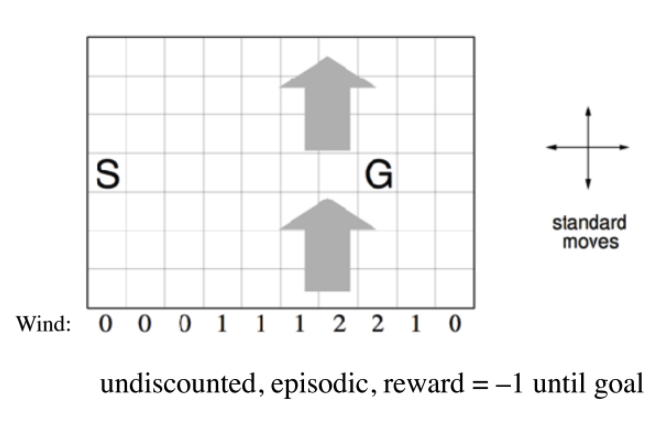

4. Temporal Difference (TD) Control with Q-learning

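One of the early breakthroughs in reinforcement learning came in 1989, when Watkins introduced Q-learning. This is an off-policy TD control algorithm. It makes the following update:

$$Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha \left[ R_{t+1} + \gamma \max_a Q(S_{t+1}, a) - Q(S_t, A_t) \right]$$

The target in this update is:

$$R_{t+1} + \gamma \max_a Q(S_{t+1}, a)$$

In this algorithm the learned action-value function, $Q$, directly approximates the optimal action-value function, $q_*$, independent of the policy being followed.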
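A single Q-learning update can be worked through by hand. The sketch below applies the update twice to the same state-action pair, using the step size (0.5), discount (1.0) and reward (-1) that appear later in this post. The helper `q_learning_update` and the two-state table are illustrative assumptions only.

```python
import numpy as np


def q_learning_update(Q, s, a, r, s_next, alpha=0.5, gamma=1.0):
    """Apply one Q-learning update to Q[s][a] in place and return the new value."""
    target = r + gamma * np.max(Q[s_next])   # R_{t+1} + gamma * max_a Q(S_{t+1}, a)
    Q[s][a] += alpha * (target - Q[s][a])
    return Q[s][a]


# Hypothetical table with two states and four actions each, initialized to zero.
Q = {30: np.zeros(4), 31: np.zeros(4)}

first = q_learning_update(Q, s=30, a=1, r=-1, s_next=31)
print(first)   # -0.5  = 0 + 0.5 * (-1 + 0 - 0)

second = q_learning_update(Q, s=30, a=1, r=-1, s_next=31)
print(second)  # -0.75 = -0.5 + 0.5 * (-1 + 0 - (-0.5))
```

Values such as -0.5 and -0.75 are what a table holds for state-actions that have only been visited once or twice, which is why they show up frequently after short runs.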

5. Implementation

Figure 2 shows the algorithm:

Next, we present the code that implements the algorithm.

env = WindyGridworldEnv()

5.1 Policy

The following function captures the policy used by the agent:

def create_policy_epsilon_greedy(Q, epsilon, n_A):
    def policy_function(observation):
        action_probs = np.ones(n_A, dtype=float)*epsilon/n_A
        best_action = np.argmax(Q[observation])
        # probabilities for each action, length n_A:
        action_probs[best_action] += (1.0 - epsilon)
        return np.random.choice(np.arange(len(action_probs)), p=action_probs)
    return policy_function

5.3 Main loop

The following function implements the main loop of the algorithm. It iterates for n_episodes. It also takes a list of monitored_state_actions for which it will record the evolution of action values. This is handy for showing how action values converge during the process.

def td_control_qlearning(env, n_episodes, gamma=1.0, alpha=0.5, epsilon=0.1, monitored_state_actions=None, diag=False):
    Q = defaultdict(lambda: np.zeros(env.action_space.n))
    pi = create_policy_epsilon_greedy(Q, epsilon, env.action_space.n)
    monitored_state_action_values = defaultdict(list)
    stats = myplot.EpisodeStats(
        episode_lengths=np.zeros(n_episodes),
        episode_rewards=np.zeros(n_episodes))
    for i in range(n_episodes):
        if (i + 1)%10 == 0:
            print("\rEpisode {}/{}".format(i + 1, n_episodes), end="")
            sys.stdout.flush()
        if diag: print(f'\nepisode {i}:')
        #---initialize St
        St = env.reset()
        #---repeat for each step of episode
        for t in itertools.count():
            #---choose At from St, using policy derived from Q
            At = pi(St)
            #---take action At, observe Rt+1, St+1
            Stp1, Rtp1, done, _ = env.step(At) # St+1, Rt+1 OR s',r
            if diag: print(f"---t={t} St, At, Rt+1, St+1: {St, At, Rtp1, Stp1}")
            stats.episode_rewards[i] += Rtp1
            stats.episode_lengths[i] = t
            #---update Q (the target uses the greedy action value in St+1)
            Q[St][At] += alpha*(Rtp1 + gamma*np.max(Q[Stp1]) - Q[St][At])
            if diag: print(f"Q[St][At]: {Q[St][At]}")
            St = Stp1
            if done:
                break
        #---until St is terminal
        if monitored_state_actions:
            for msa in monitored_state_actions:
                s = msa[0]; a = msa[1]
                monitored_state_action_values[msa].append(Q[s][a])
    return Q, stats, monitored_state_action_values

5.4 Monitored state-actions

Let’s pick a number of state-actions to monitor. Each tuple captures the state number (0 to 69) and an action (0, 1, 2, 3).

monitored_state_actions = [(0, 1), (7, 3), (57, 2), (68, 0)]

Q,stats,monitored_state_action_values = td_control_qlearning(
    env,
    n_episodes=1,
    alpha=0.5,
    monitored_state_actions=monitored_state_actions,
    diag=False)

Q

defaultdict(<function __main__.td_control_qlearning.<locals>.<lambda>>,

{0: array([-2.8125 , -3.49072266, -2.94140625, -3. ]),

1: array([-3.5 , -3.77097797, -3.40185547, -3.41210938]),

2: array([-4. , -4.17954469, -3.83534861, -3.8840332 ]),

3: array([-5. , -4.63183594, -5. , -4.63481559]),

4: array([-5.265625 , -5.41390991, -5.34548903, -5.25840017]),

5: array([-5.75 , -5.60140991, -5.8125 , -5.63739991]),

6: array([-6. , -6.11438866, -6. , -6.15069509]),

7: array([-5.75 , -5.41633144, -5.84375 , -5.76096535]),

8: array([-5.35792048, -4.79130716, -5. , -4.79510498]),

9: array([-3.96582031, -4. , -3.95793663, -4.13354492]),

10: array([-2.671875 , -2.97070312, -2.9296875 , -3. ]),

11: array([-3.1875 , -3.13342285, -2.88378906, -2.92578125]),

12: array([-3.41015625, -3.28710938, -3.18228149, -3.08398438]),

13: array([-4.015625 , -3.18945312, -3.5 , -3.52929688]),

14: array([-3.26834106, -3.3125 , -2.5 , -3.83972931]),

15: array([-2.875 , -3.25 , -0.5 , -3.05931658]),

16: array([-3.48938866, 0. , 0. , 0. ]),

17: array([-3.33740234, -3.59447479, -4.25 , -4.77110767]),

18: array([-4.3907574 , -3.11495686, -3.375 , -4.21875 ]),

19: array([-3.04541016, -3.5 , -3.08167887, -3.44604492]),

20: array([-2.875 , -2.4375 , -2.76171875, -2.5 ]),

21: array([-2.1875 , -2.56640625, -2.4765625 , -2.5 ]),

22: array([-2.68457031, -2.56640625, -2.52832031, -2.46875 ]),

23: array([-3.13543797, -2.5 , -2. , -3.24468994]),

24: array([-2.12890625, -0.875 , -1. , -1.75 ]),

25: array([-3.0148716, -0.5 , 0. , 0. ]),

27: array([-2.625 , -2.47058392, -1.75 , -3.1541338 ]),

28: array([-3.33065492, -2.46311188, -2. , -2.8671875 ]),

29: array([-2.97097492, -2.265625 , -2.32275391, -2.29394531]),

30: array([-2.71875 , -2.25 , -2.37890625, -2.5 ]),

31: array([-1.90625 , -2.2265625, -1.890625 , -2.40625 ]),

32: array([-1.765625 , -1.9140625, -1.921875 , -2.09375 ]),

33: array([-1.75 , -1.3125 , -1.5 , -2.09765625]),

34: array([-0.5, -0.5, -0.5, -1.5]),

37: array([0., 0., 0., 0.]),

38: array([-1.1875 , -1.203125, -1. , -0.9375 ]),

39: array([-1.98828125, -2. , -1.6640625 , -1.65625 ]),

40: array([-2.71875 , -1.8125 , -2.078125, -2. ]),

41: array([-1.625 , -1.25 , -1.4375, -2. ]),

42: array([-1.78125, -1.3125 , -1.25 , -1.125 ]),

43: array([-1.4375, -0.75 , -1. , -1. ]),

44: array([-0.5, 0. , 0. , 0. ]),

45: array([-0.5, 0. , 0. , 0. ]),

48: array([-0.875 , -1.203125, -0.5 , -0.5 ]),

49: array([-1.78125, -1. , -1.125 , -0.875 ]),

50: array([-2.078125, -1.75 , -1.78125 , -1.5 ]),

51: array([-1.375 , -1.4375, -1.6875, -1.6875]),

52: array([-1. , -0.875, -1. , -1.5 ]),

53: array([-0.5 , -0.5 , -0.5 , -1.0625]),

54: array([-0.5, -0.5, 0. , 0. ]),

57: array([0., 0., 0., 0.]),

58: array([-0.5, 0. , 0. , 0. ]),

59: array([-1.125, -0.5 , -0.5 , -0.5 ]),

60: array([-1.5625, -1.625 , -1.5 , -1.5 ]),

61: array([-1.5625, -1.3125, -1.5 , -1.25 ]),

62: array([-1.125, -0.875, -1. , -0.75 ]),

63: array([-0.5 , -0.75, -0.5 , 0. ]),

68: array([0., 0., 0., 0.]),

69: array([-0.5, 0. , 0. , 0. ])})

Q[50]

array([-2.078125, -1.75 , -1.78125 , -1.5 ])

Q[7][0], Q[7][1], Q[7][2], Q[7][3]

(-5.75, -5.416331440210342, -5.84375, -5.760965347290039)

print(monitored_state_actions[0])
print(monitored_state_action_values[monitored_state_actions[0]])

(0, 1)
[-3.49072265625]

# last value in monitored_state_actions should be value in Q
msa = monitored_state_actions[0]; print('msa:', msa)
s = msa[0]; print('s:', s)
a = msa[1]; print('a:', a)
monitored_state_action_values[msa][-1], Q[s][a] #monitored_stuff[msa] BUT Q[s][a]

msa: (0, 1)
s: 0
a: 1
(-3.49072265625, -3.49072265625)

5.5 Run 1

Q1,stats,monitored_state_action_values1 = td_control_qlearning(
    env,
    n_episodes=20,
    alpha=0.5,
    monitored_state_actions=monitored_state_actions,
    diag=False)

Episode 20/20

# last value in monitored_state_actions should be value in Q
msa = monitored_state_actions[0]; print('msa:', msa)
s = msa[0]; print('s:', s)
a = msa[1]; print('a:', a)
monitored_state_action_values1[msa][-1], Q1[s][a] #monitored_stuff[msa] BUT Q[s][a]

msa: (0, 1)
s: 0
a: 1
(-7.000738032627851, -7.000738032627851)

5.5.1 Monitored state-actions

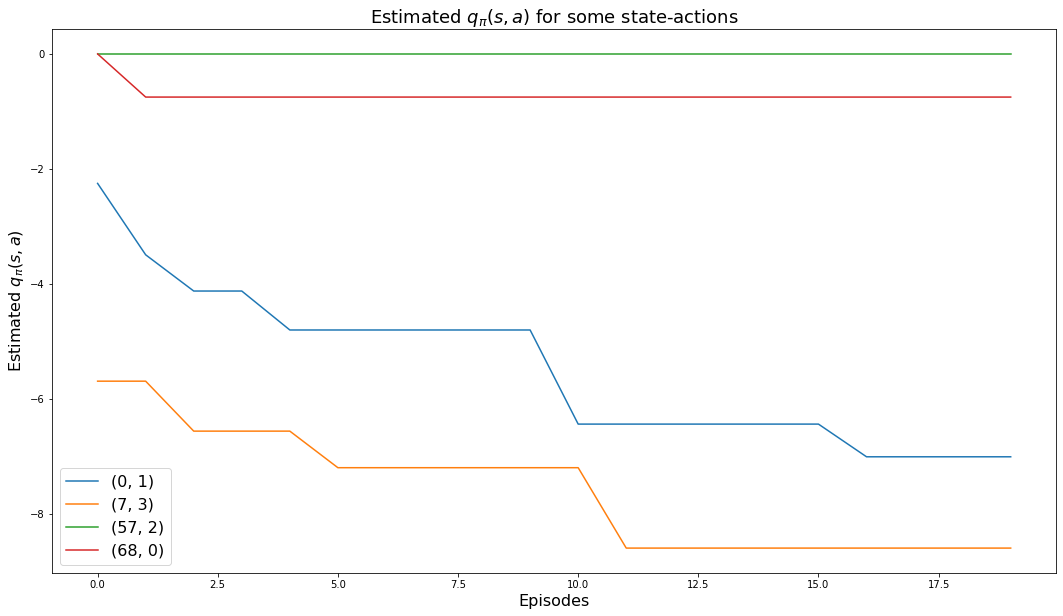

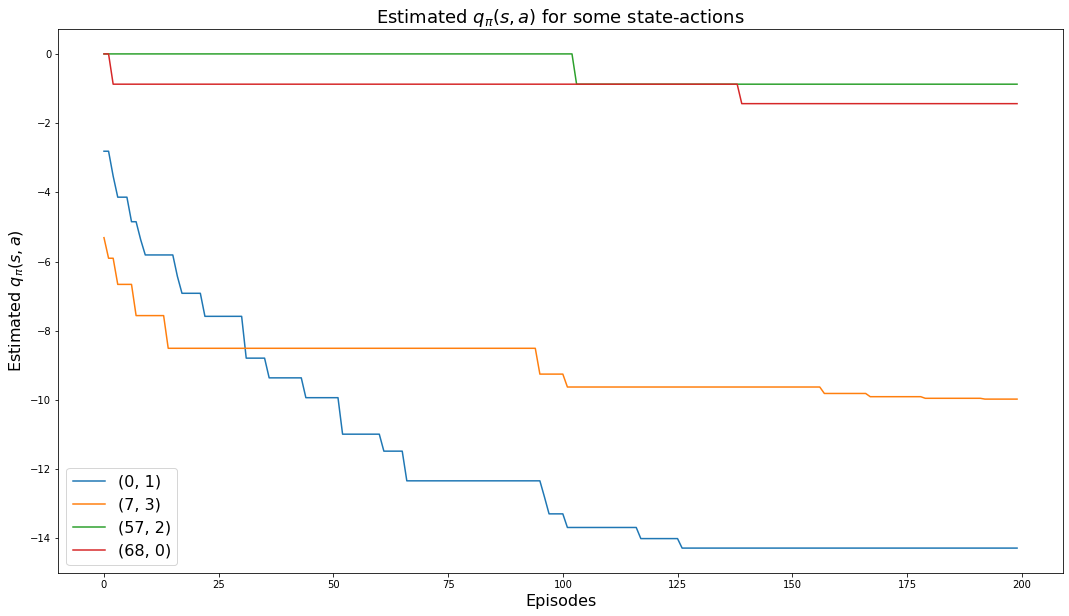

The following chart shows how the estimated values of the four monitored state-action pairs evolve over the 20 episodes of this run:

plt.rcParams["figure.figsize"] = (18,10)
for msa in monitored_state_actions:
    plt.plot(monitored_state_action_values1[msa])
# raw strings keep the backslash in \pi from being read as an escape sequence
plt.title(r'Estimated $q_\pi(s,a)$ for some state-actions', fontsize=18)
plt.xlabel('Episodes', fontsize=16)
plt.ylabel(r'Estimated $q_\pi(s,a)$', fontsize=16)
plt.legend(monitored_state_actions, fontsize=16)
plt.show()

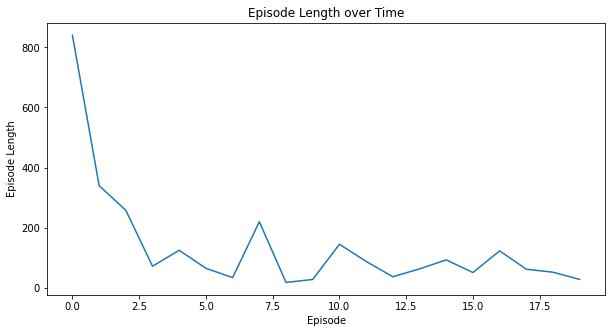

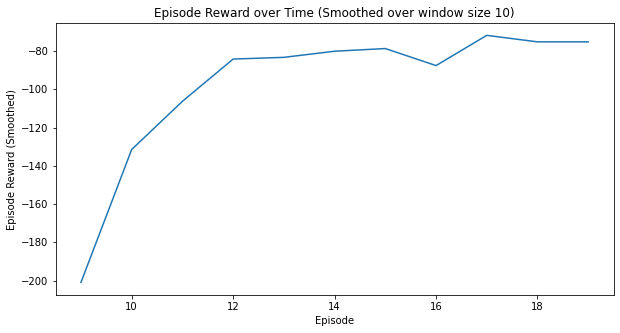

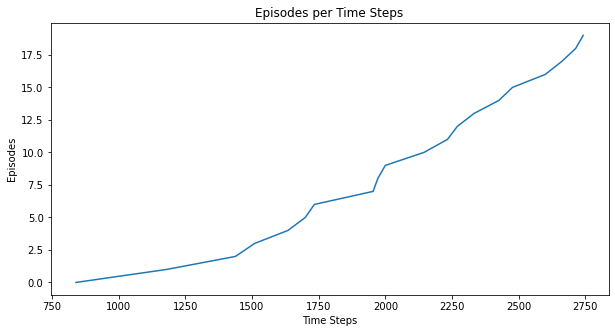

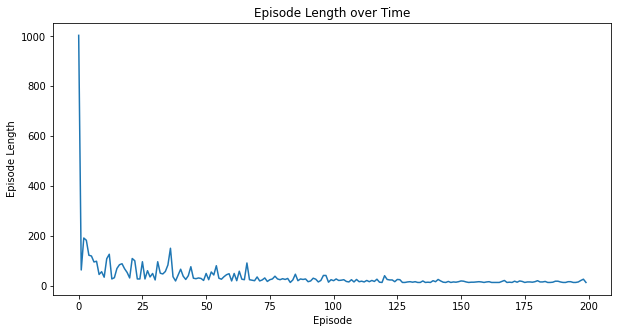

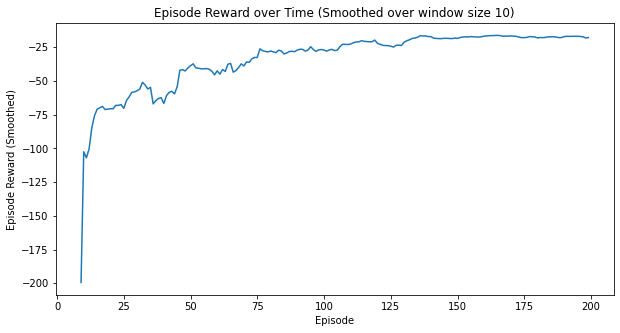

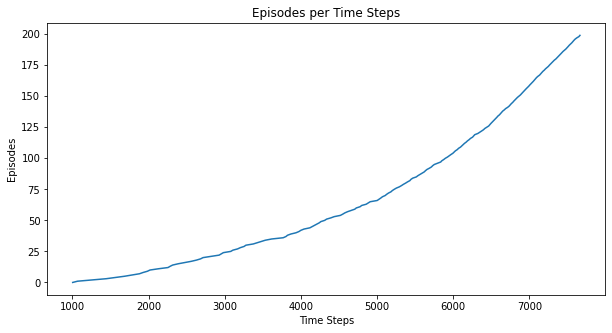

5.5.2 Other metrics

Here are some additional metrics:

myplot.plot_episode_stats(stats);

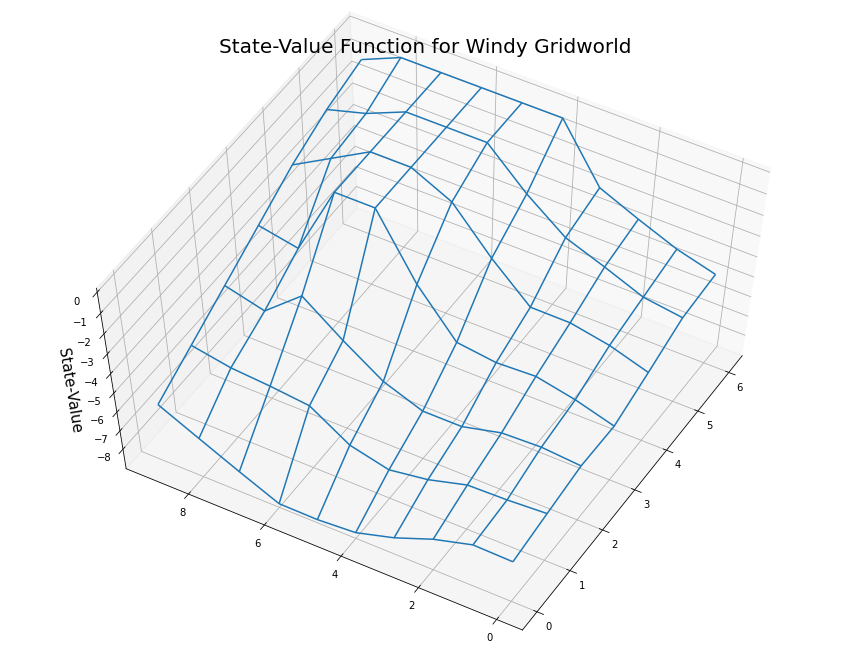

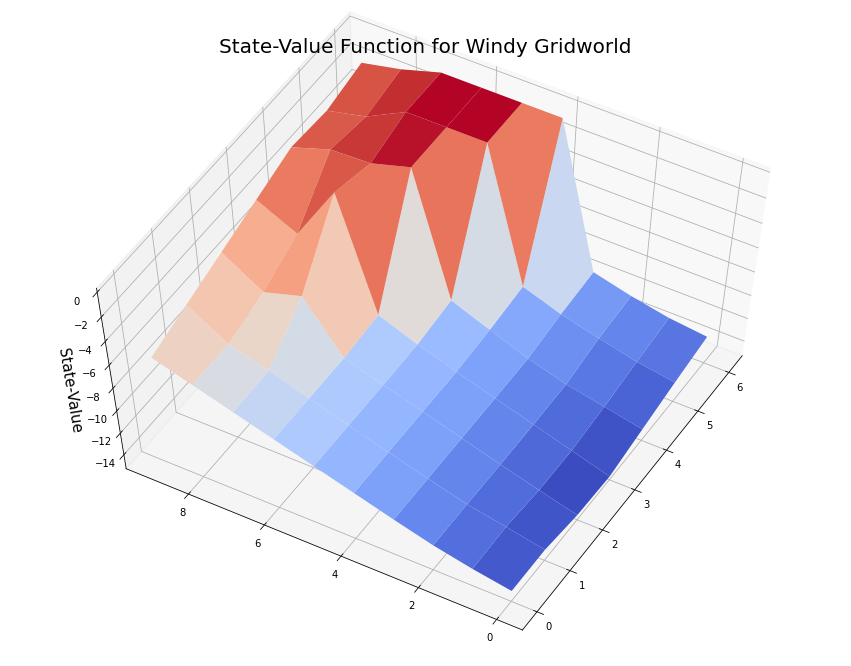

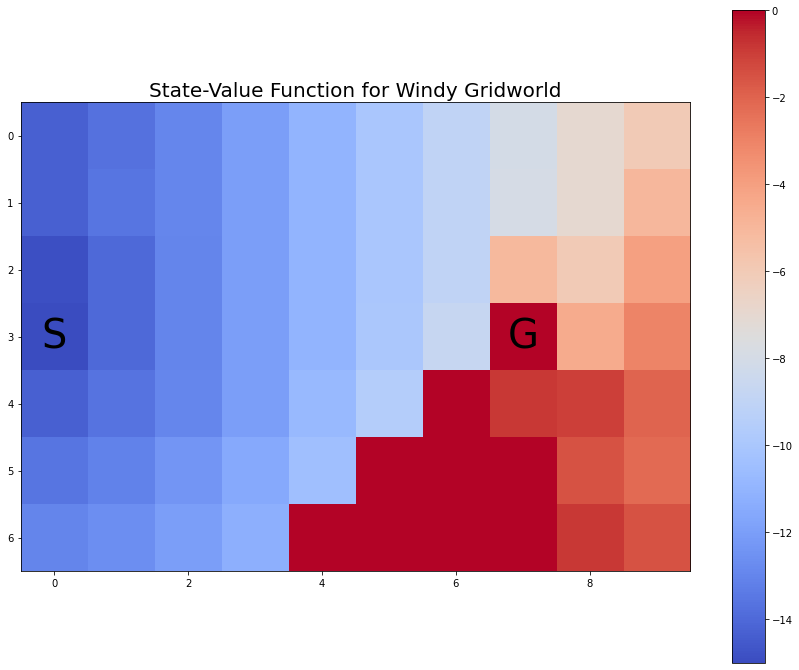

5.5.3 State-value function

To make a plot of the state-value function we derive the state-value function from the action-value function:

# create state-value function from action-value function
V1 = defaultdict(float)
for state, actions in Q1.items():
    action_value = np.max(actions)
    V1[state] = action_value

# convert V1 to V1p for plotting
states_shape = (7, 10)
nS = np.prod(states_shape)
V1p = {}
for s in range(nS):
    position = np.unravel_index(s, states_shape)
    V1p[position] = V1[s]

V1p

{(0, 0): -6.794443174265325,

(0, 1): -6.75,

(0, 2): -7.335345274743457,

(0, 3): -8.154132374455273,

(0, 4): -8.7578125,

(0, 5): -8.903061628341675,

(0, 6): -8.879239677504732,

(0, 7): -7.948497410531195,

(0, 8): -6.976564460820164,

(0, 9): -5.99234181646386,

(1, 0): -6.431787490844727,

(1, 1): -6.5581399807706475,

(1, 2): -6.610924970967062,

(1, 3): -7.18147534433973,

(1, 4): -7.5,

(1, 5): -7.0,

(1, 6): -5.723040073535287,

(1, 7): -5.510003575880546,

(1, 8): -5.363144869780659,

(1, 9): -4.998378132942372,

(2, 0): -6.083317756652832,

(2, 1): -5.924814701080322,

(2, 2): -6.0003215973993065,

(2, 3): -6.5,

(2, 4): -6.5,

(2, 5): -5.75,

(2, 6): -4.438269967219039,

(2, 7): -2.9863146543502808,

(2, 8): -4.5,

(2, 9): -3.999543551551426,

(3, 0): -6.1389233358204365,

(3, 1): -5.568093121051788,

(3, 2): -5.159965634346008,

(3, 3): -5.25,

(3, 4): -5.0,

(3, 5): -2.875,

(3, 6): 0.0,

(3, 7): 0.0,

(3, 8): -3.3725199811160564,

(3, 9): -2.9999560102878604,

(4, 0): -5.43900203704834,

(4, 1): -4.842230796813965,

(4, 2): -4.4770660400390625,

(4, 3): -4.46392822265625,

(4, 4): -2.8125,

(4, 5): -0.875,

(4, 6): 0.0,

(4, 7): 0.0,

(4, 8): -0.9999990463256836,

(4, 9): -1.9999922811985016,

(5, 0): -4.68505859375,

(5, 1): -4.420445084571838,

(5, 2): -3.68328857421875,

(5, 3): -3.0,

(5, 4): -1.6484375,

(5, 5): 0.0,

(5, 6): 0.0,

(5, 7): 0.0,

(5, 8): -0.75,

(5, 9): -1.25,

(6, 0): -4.5,

(6, 1): -4.0,

(6, 2): -3.282155990600586,

(6, 3): -2.502685546875,

(6, 4): 0.0,

(6, 5): 0.0,

(6, 6): 0.0,

(6, 7): 0.0,

(6, 8): 0.0,

(6, 9): -0.75}

myplot.plot_state_value_surface(V1p, title='State-Value Function for Windy Gridworld', wireframe=True, azim=-150, elev=60);

myplot.plot_state_value_surface(V1p, title='State-Value Function for Windy Gridworld', wireframe=False, azim=-150, elev=60);

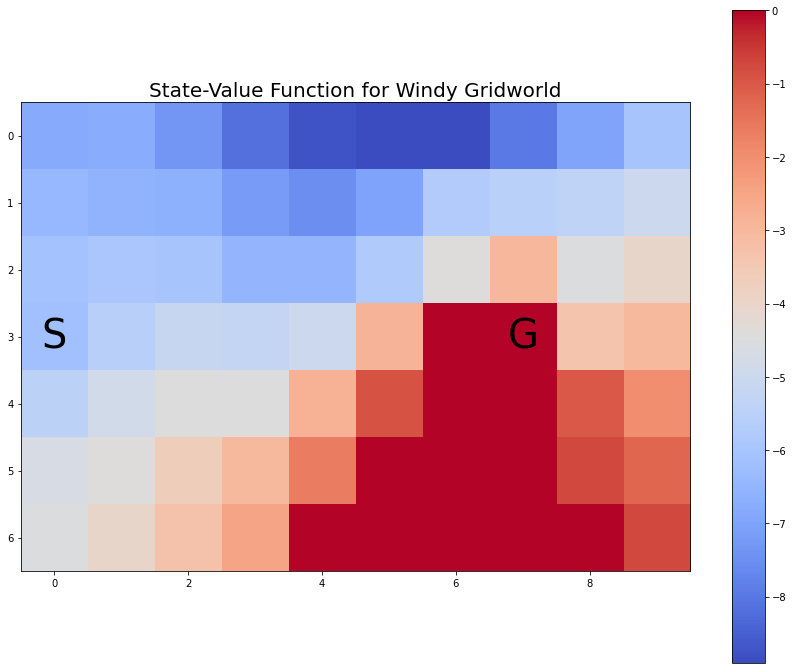

myplot.plot_state_value_heatmap_windy_gridworld(V1p, title='State-Value Function for Windy Gridworld');

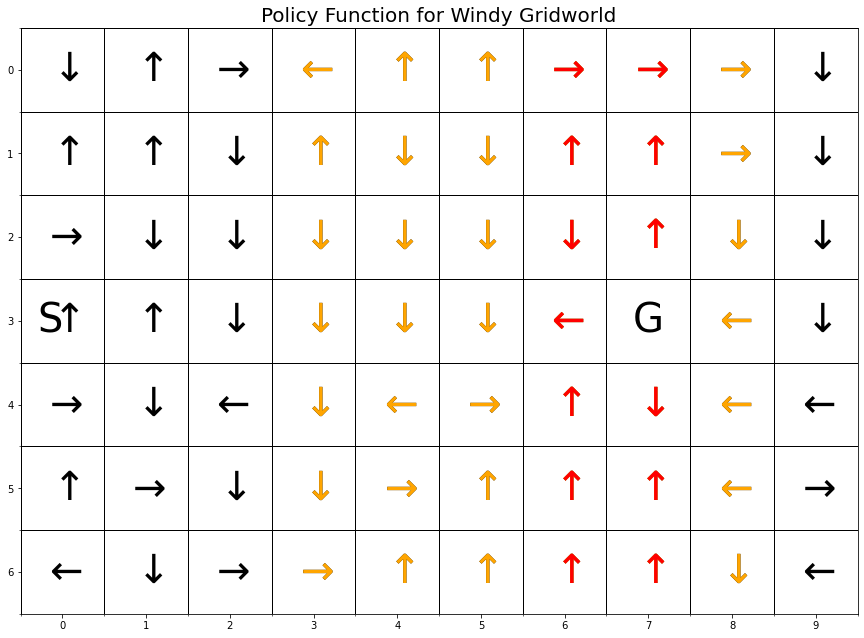

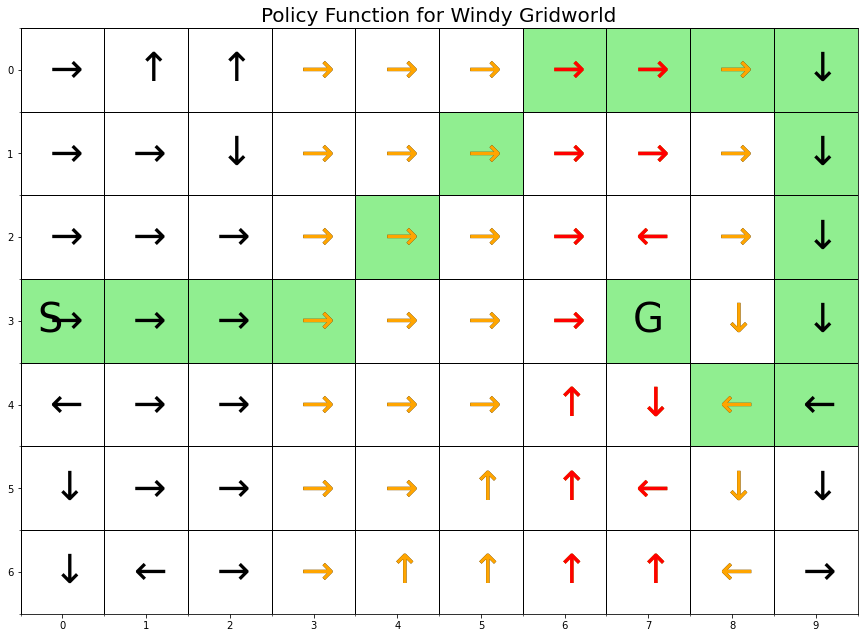

5.5.4 Policy function

# create policy function from action-value function
P1 = defaultdict(float)
for state, actions in Q1.items():
    action = np.argmax(actions)
    P1[state] = action

# convert P1 to P1p for plotting
states_shape = (7, 10)
nS = np.prod(states_shape)
P1p = {}
for s in range(nS):
    position = np.unravel_index(s, states_shape)
    P1p[position] = P1[s]

myplot.plot_policy_windy_gridworld(P1p, title='Policy Function for Windy Gridworld');

The graphic above does not show a path from S to G. More training is needed. Note that the states with the orange arrows are subject to an upward wind drift of 1 cell, and the states with the red arrows are subject to an upward drift of 2 cells.
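Whether a greedy policy actually leads from S to G can also be checked programmatically instead of by eye. The following sketch follows a policy dictionary keyed by `(row, col)`, like `P1p`, through a simplified model of the grid and reports whether the goal is reached. The wind vector, the clipping rule and the helper names `step` and `greedy_rollout` are assumptions made for illustration; the real transitions come from `WindyGridworldEnv`.

```python
WIND = [0, 0, 0, 1, 1, 1, 2, 2, 1, 0]    # assumed upward wind strength per column
MOVES = {0: (-1, 0), 1: (0, 1), 2: (1, 0), 3: (0, -1)}  # up, right, down, left
N_ROWS, N_COLS = 7, 10


def step(position, action):
    """Next (row, col) under the assumed wind model, clipped to the grid."""
    row, col = position
    d_row, d_col = MOVES[action]
    new_row = min(max(row + d_row - WIND[col], 0), N_ROWS - 1)
    new_col = min(max(col + d_col, 0), N_COLS - 1)
    return (new_row, new_col)


def greedy_rollout(policy, start=(3, 0), goal=(3, 7), max_steps=100):
    """Follow a deterministic policy from start; return (path, reached_goal)."""
    position, path, visited = start, [start], {start}
    for _ in range(max_steps):
        if position == goal:
            return path, True
        position = step(position, int(policy[position]))
        path.append(position)
        if position in visited:
            # Deterministic policy and dynamics: a revisited cell means a loop.
            return path, False
        visited.add(position)
    return path, position == goal


# Hypothetical policy: always move right. It gets stuck in the top-right corner.
always_right = {(r, c): 1 for r in range(N_ROWS) for c in range(N_COLS)}
path, reached = greedy_rollout(always_right)
print(reached, path[-1])  # False (0, 9)
```

Calling `greedy_rollout(P1p)` in the notebook would give the same kind of answer for the learned policy, under the assumption that the simplified model matches the environment.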

5.6 Run 2

Q2,stats,monitored_state_action_values2 = td_control_qlearning(
    env,
    n_episodes=200,
    alpha=0.5,
    monitored_state_actions=monitored_state_actions,
    diag=False)

Episode 200/200

# last value in monitored_state_actions should be value in Q
msa = monitored_state_actions[0]; print('msa:', msa)
s = msa[0]; print('s:', s)
a = msa[1]; print('a:', a)
monitored_state_action_values2[msa][-1], Q2[s][a] #monitored_stuff[msa] BUT Q[s][a]

msa: (0, 1)
s: 0
a: 1
(-14.282349092018912, -14.282349092018912)

5.6.1 Monitored state-actions

The following chart shows how the estimated values of the monitored state-action pairs evolve over the 200 episodes of this run:

plt.rcParams["figure.figsize"] = (18,10)
for msa in monitored_state_actions:
    plt.plot(monitored_state_action_values2[msa])
# raw strings keep the backslash in \pi from being read as an escape sequence
plt.title(r'Estimated $q_\pi(s,a)$ for some state-actions', fontsize=18)
plt.xlabel('Episodes', fontsize=16)
plt.ylabel(r'Estimated $q_\pi(s,a)$', fontsize=16)
plt.legend(monitored_state_actions, fontsize=16)
plt.show()

5.6.2 Other metrics

Here are some additional metrics:

myplot.plot_episode_stats(stats);

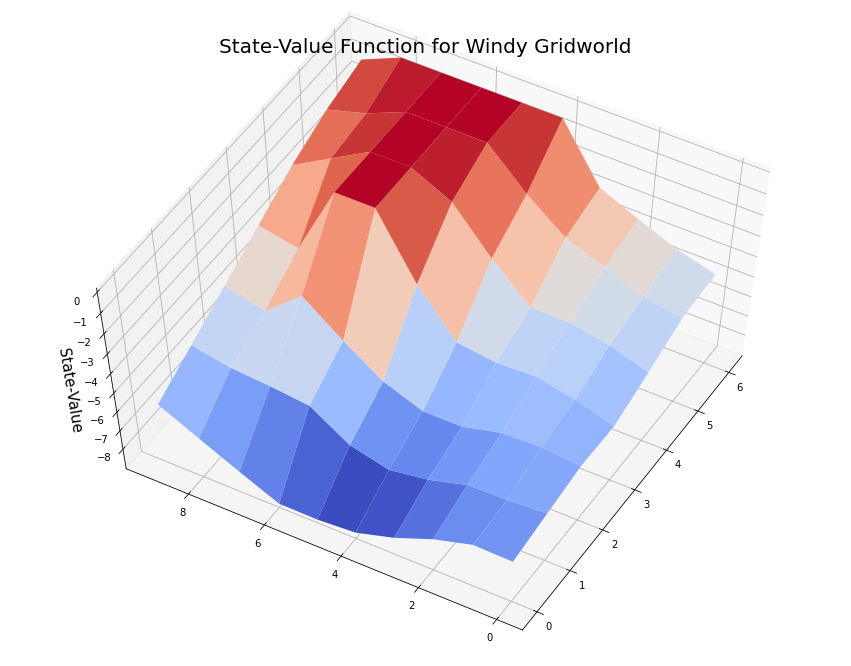

5.6.3 State-value function

To make a plot of the state-value function we reshape the values to align with the Windy Gridworld pattern.

# create state-value function from action-value function
V2 = defaultdict(float)
for state, actions in Q2.items():
    action_value = np.max(actions)
    V2[state] = action_value

# convert V2 to V2p for plotting
states_shape = (7, 10)
nS = np.prod(states_shape)
V2p = {}
for s in range(nS):
    position = np.unravel_index(s, states_shape)
    V2p[position] = V2[s]

V2p

{(0, 0): -14.282349092018912,

(0, 1): -13.68032807127302,

(0, 2): -12.939003408020197,

(0, 3): -11.99983295310739,

(0, 4): -10.999999999994957,

(0, 5): -10.0,

(0, 6): -9.0,

(0, 7): -8.0,

(0, 8): -7.0,

(0, 9): -6.0,

(1, 0): -14.285350936035444,

(1, 1): -13.584042873715836,

(1, 2): -12.934954023019891,

(1, 3): -11.999654200229319,

(1, 4): -10.99999853995405,

(1, 5): -10.0,

(1, 6): -8.99998610923861,

(1, 7): -7.930270317065352,

(1, 8): -6.982483465456391,

(1, 9): -5.0,

(2, 0): -14.847148483608096,

(2, 1): -13.958161506337575,

(2, 2): -12.999762121348802,

(2, 3): -11.999979043339366,

(2, 4): -11.0,

(2, 5): -9.99977489526516,

(2, 6): -8.994989716305597,

(2, 7): -5.0547588847985025,

(2, 8): -5.991807010623835,

(2, 9): -4.0,

(3, 0): -15.0,

(3, 1): -14.0,

(3, 2): -13.0,

(3, 3): -12.0,

(3, 4): -10.996906094975667,

(3, 5): -9.9309089990189,

(3, 6): -8.695538186843859,

(3, 7): 0.0,

(3, 8): -4.5,

(3, 9): -3.0,

(4, 0): -14.291900411913428,

(4, 1): -13.598108034538605,

(4, 2): -12.936995944519417,

(4, 3): -11.983810920999005,

(4, 4): -10.775889626518168,

(4, 5): -9.523413313493439,

(4, 6): 0.0,

(4, 7): -0.875,

(4, 8): -1.0,

(4, 9): -2.0,

(5, 0): -13.589982753218097,

(5, 1): -13.07459986760809,

(5, 2): -12.314526569868129,

(5, 3): -11.501864930496328,

(5, 4): -10.401542649497674,

(5, 5): 0.0,

(5, 6): 0.0,

(5, 7): 0.0,

(5, 8): -1.5,

(5, 9): -2.197265625,

(6, 0): -12.9719040690361,

(6, 1): -12.614882436826445,

(6, 2): -11.988278396170312,

(6, 3): -11.198276679231789,

(6, 4): 0.0,

(6, 5): 0.0,

(6, 6): 0.0,

(6, 7): 0.0,

(6, 8): -0.875,

(6, 9): -1.5}

myplot.plot_state_value_surface(V2p, title='State-Value Function for Windy Gridworld', wireframe=False, azim=-150, elev=60);

myplot.plot_state_value_heatmap_windy_gridworld(V2p, title='State-Value Function for Windy Gridworld');

5.6.4 Policy function

# create policy function from action-value function
P2 = defaultdict(float)
for state, actions in Q2.items():
    action = np.argmax(actions)
    P2[state] = action

# convert P2 to P2p for plotting
states_shape = (7, 10)
nS = np.prod(states_shape)
P2p = {}
for s in range(nS):
    position = np.unravel_index(s, states_shape)
    P2p[position] = P2[s]

# hide

def plot_policy_windy_gridworld(P, title='Policy Function', highlight_cells=None, highlight_color='lightgrey'):
    min_x = min(k[0] for k in P.keys())
    max_x = max(k[0] for k in P.keys())
    min_y = min(k[1] for k in P.keys())
    max_y = max(k[1] for k in P.keys())
    x_range = np.arange(min_x, max_x + 1)
    y_range = np.arange(min_y, max_y + 1)
    X, Y = np.meshgrid(x_range, y_range)
    fig = plt.figure(figsize=(15, 12))
    ax = fig.add_subplot(1, 1, 1)
    ax.set_xlabel('')
    ax.set_ylabel('')
    ax.set_title(title, fontsize=20)
    Z = np.apply_along_axis(lambda _: P[(_[0], _[1])], 2, np.dstack([X,Y]))
    Z = np.transpose(Z)
    cmap_bg_only = colors.ListedColormap(['white', 'white', 'white', 'white'])
    # highlight cells
    if highlight_cells:
        Z_bg_with_hilts = np.zeros_like(Z)
        for e in highlight_cells: Z_bg_with_hilts[e] = 1
        cmap_bg_with_hilts = colors.ListedColormap(['white', highlight_color])
        im = ax.imshow(Z_bg_with_hilts, cmap=cmap_bg_with_hilts)
    else:
        im = ax.imshow(Z, cmap=cmap_bg_only)
    # draw arrows
    ax.text(0, 3, 'S', ha="right", va="center", fontsize=40)
    ax.text(7, 3, 'G', ha="center", va="center", fontsize=40)
    arrows = {0: r"$\uparrow$", 1: r"$\rightarrow$", 2: r"$\downarrow$", 3: r"$\leftarrow$"}
    # all arrows
    for i in range(7):
        for j in range(10):
            if not (j,i) == (7,3):
                ax.text(j, i, arrows[Z[i,j]], ha="center", va="center", fontsize=40)
    # arrows subject to upward wind with strength 1
    for i in range(7):
        for j in range(3, 9):
            if not (j,i) == (7,3):
                ax.text(j, i, arrows[Z[i,j]], ha="center", va="center", fontsize=40, color='orange')
    # arrows subject to upward wind with strength 2
    for i in range(7):
        for j in range(6, 8):
            if not (j,i) == (7,3):
                ax.text(j, i, arrows[Z[i,j]], ha="center", va="center", fontsize=40, color='red')
    # GRID
    # Major ticks
    ax.set_xticks(np.arange(0, 10, 1))
    ax.set_yticks(np.arange(0, 7, 1))
    # Labels for major ticks (labels start from 1 rather than 0)
    # ax.set_xticklabels(np.arange(1, 11, 1))
    # ax.set_yticklabels(np.arange(1, 8, 1))
    # Minor ticks
    ax.set_xticks(np.arange(-.5, 10, 1), minor=True)
    ax.set_yticks(np.arange(-.5, 7, 1), minor=True)
    ax.grid(which='minor', color='k', linestyle='-', linewidth=1)

opt_path = [
    (3,0),(3,1),(3,2),(3,3),(2,4),(1,5),(0,6),(0,7),(0,8),(0,9),(1,9),(2,9),(3,9),(4,9),(4,8),(3,7)]
myplot.plot_policy_windy_gridworld(
    P2p,
    title='Policy Function for Windy Gridworld',
    highlight_cells=opt_path,
    highlight_color='lightgreen');

The graphic above shows the optimal path from S to G. Note that the states with the orange arrows are subject to an upward wind drift of 1 cell, and the states with the red arrows are subject to an upward drift of 2 cells.
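As a quick consistency check, the length of the highlighted path can be compared with the learned value of the start state. The sketch below counts the steps in `opt_path` and converts them into an undiscounted return at -1 per step. The value -15.0 is copied from the `V2p` output for cell (3, 0) shown earlier; the variable names are illustrative.

```python
# Highlighted path from S = (3, 0) to G = (3, 7), as (row, col) cells.
opt_path = [
    (3, 0), (3, 1), (3, 2), (3, 3), (2, 4), (1, 5), (0, 6), (0, 7),
    (0, 8), (0, 9), (1, 9), (2, 9), (3, 9), (4, 9), (4, 8), (3, 7)]

n_steps = len(opt_path) - 1      # transitions between consecutive cells
path_return = -1.0 * n_steps     # reward of -1 per step, no discounting

v_start = -15.0                  # V2p[(3, 0)] from the run with 200 episodes

print(n_steps)                   # 15
print(path_return == v_start)    # True
```

The estimated value of the start state after 200 episodes equals the return of this 15-step path, which is what we would expect once the action values along the path have settled.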